Speed of Data

Speed of Data

Robert Koch, Enterprise Architect

I’ve been thinking about this topic for quite some time and while I’m putting this into this blog it probably be only a part of a larger discussion. I wanted to talk about the speed of the data as it traverse through your business systems. As you know data sitting there has no intrinsic value. Quality checks slows down the speed of data thus lessening it’s value. Some places who might be spitting out data in the raw  and would have an “unverified” stamp on their data so their customers know that the data is received but not validated yet. Much of the data I work with in my career are verified after it goes through a thorough review. The review process will take time since there are human elements involved doing the verifying. If you think about it, even when the data validation is completely automated, don’t forget that the process is designed by humans, programmed by humans, tested by humans, and used by humans. Basically it cannot NOT be touched by humans! Not every scenario can be accounted for at the time of testing so there’ll be a curve ball thrown while validating the data on a future day. Today, we might not know what yet. Again, still involves that pesky human element.

and would have an “unverified” stamp on their data so their customers know that the data is received but not validated yet. Much of the data I work with in my career are verified after it goes through a thorough review. The review process will take time since there are human elements involved doing the verifying. If you think about it, even when the data validation is completely automated, don’t forget that the process is designed by humans, programmed by humans, tested by humans, and used by humans. Basically it cannot NOT be touched by humans! Not every scenario can be accounted for at the time of testing so there’ll be a curve ball thrown while validating the data on a future day. Today, we might not know what yet. Again, still involves that pesky human element.

Most of the data end users see (or prefer to see) are data that is usually aggregated and visualized. Aggregations over raw data helps us see trends right away and can detect errors out of the bat. For example, new data coming in may be way off compared to yesterday’s data but you cannot see it when comparing huge volumes of raw data (thus too much static). When we aggregate as groups, we can see anomalies better. Another way to verify data is to use visualizations – charts – showing unexpected swings. Visualizations helps highlight differences that can be caught by the naked eye. Again, with end users verifing the data, no matter how much we try to automate validations from start to end, we need to build in intuition – the capability of having a “bad feeling.”  Even with a great AI that helps drive the car for you, although tested up the wazoo, we will still have minor flaws that causes accidents or scenarios that we didn’t account for. Regardless, we (humans again) are constantly learning from it and making our data better and more intelligent for the consuming systems.

Even with a great AI that helps drive the car for you, although tested up the wazoo, we will still have minor flaws that causes accidents or scenarios that we didn’t account for. Regardless, we (humans again) are constantly learning from it and making our data better and more intelligent for the consuming systems.

How can we succeed in automating validation? I’m of the opinion that we need to create an completely independent and separate system to digest the data and make sure it makes sense within human-defined boundaries since the AI won’t be much help at this point. It’s a given that AI is dumb until we make it smarter! APIs exposed to the datasets are an ideal approach where we may tap into the data source and independently verify it. Our systems may be tainted or opininated with a scrum team that programs in, say, in C# and then the automated process is done using the same language and the team’s opinionated thinking. We should be crafting validation intelligence with a completely different program and team; maybe a program using Python with Pandas/Dask or perhaps use Spark that forces us to view numbers in a different way. From there we can identify potential flaws in the datasets being analyzed. Years ago before I got involved with software development, I would develop a verification workflow to verify the data being produced using CheckFree’s Recon-Plus software (I think it’s called Fiserv Frontier https://www.fiserv.com/en/solutions/risk-and-compliance/financial-control-and-accounting/frontier-reconciliation.html or something now). The software can highlight differences after running through it’s comparison process. The data compared was produced by a Java-based system and using a different person and different toolset enables us to be unbiased when looking for discrepancies.

Since we’re alway verifying the data with predefined quality control in place, the speed of the data will slow to a crawl that may not placate the customers, internal or external. Some days there may be a bad data discrepancy putting a halt on the whole validation process, thus the datset becomes embargoed. Now we have a pause in the workflow which decreases the value of the data with every passing minute. Someone would have to go in and clean up and fix the data and make the process flow again. This is an on-going challenge for any shop that gets it’s rewards based on the timeliness of the data.

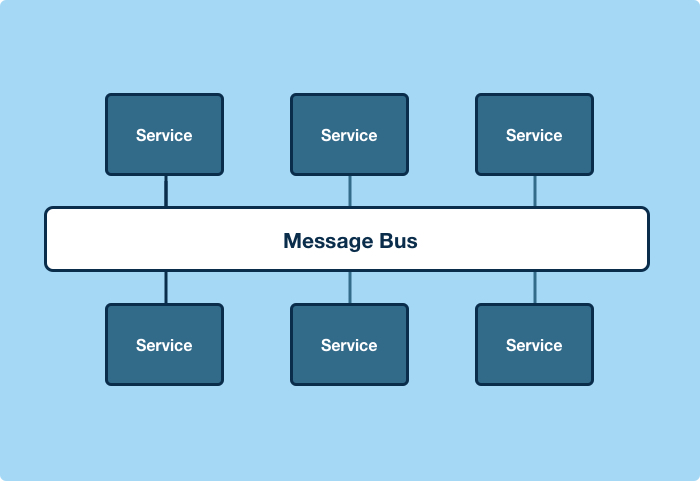

How may we get the speed of data to increase in light of all those barriers,  be it quality, validation, or embargoes in place? One way is to convert the system into a more event-driven system where you’d have data coming in real-time and conduct aggregations on the fly. When we break down data into minuate and analyze it piece by piece as they come in, we can do quicker quality checks using algothrim-driven evaluations to ensure the validity. Compare that to a batch-driven system where huge volume of records has to be analyzed as a whole and if you tried to do it piecemeal, performance can be degraded to the point where it may not be worthwhile. So again, using an enterprise message bus, we can analyze data as it comes in and if there’s a new scenario that we haven’t thought of but want to implement and check for, we can just plug in the new algorithim in the message bus pipeline without adversely affecting performance or causing bottlenecks. This is a great example of how event-driven systems makes it easier for disparate development teams to come together. Granted, setting up an event-driven system is a huge undertaking and frought with mistakes and stalls due to learning curves, organizational dynamics, and prioritzation, however once it is in place, you’re in a prime position to leverage the advantages of being event-driven.

be it quality, validation, or embargoes in place? One way is to convert the system into a more event-driven system where you’d have data coming in real-time and conduct aggregations on the fly. When we break down data into minuate and analyze it piece by piece as they come in, we can do quicker quality checks using algothrim-driven evaluations to ensure the validity. Compare that to a batch-driven system where huge volume of records has to be analyzed as a whole and if you tried to do it piecemeal, performance can be degraded to the point where it may not be worthwhile. So again, using an enterprise message bus, we can analyze data as it comes in and if there’s a new scenario that we haven’t thought of but want to implement and check for, we can just plug in the new algorithim in the message bus pipeline without adversely affecting performance or causing bottlenecks. This is a great example of how event-driven systems makes it easier for disparate development teams to come together. Granted, setting up an event-driven system is a huge undertaking and frought with mistakes and stalls due to learning curves, organizational dynamics, and prioritzation, however once it is in place, you’re in a prime position to leverage the advantages of being event-driven.

Event-driven systems adds speed and value to the incoming data as it moves within your workflow. The data is always moving and being streamed somewhere. Imagine for a minute, you’re watching the World Series on television, and there’s an intentional tape delay of 30 minutes – it’s not too bad, right? However, will the viewer appreciate the delay or will they turn off the television and monitor web-based real-time statistics? I would say as a baseball fan myself, this would turn me off as a viewer and instead do something else while glancing at real-time statistics. I’m hoping this analogy helps you understand the importance of keeping the data (in our case, the video stream) flowing in real-time and moving to the end user, AKA those who spend the money.

Data fits perfectly in economic theory. Let’s say you deposit $1,000 in the bank, the banker would loan out $800 to someone else, thus the bank creates $1,800 circulating in the economy. Same way with your data. You have ingested data into your system, the value designated as X, then system spits out added value to customers, that’s Y, so now we have X + Y value in your data stores.  Data, as it traverses thorough your system, being augmented and shared – and the more it get attached to other processes, the value of the said data can be tripled or quadrupled at that point.

Data, as it traverses thorough your system, being augmented and shared – and the more it get attached to other processes, the value of the said data can be tripled or quadrupled at that point.

Data is often known as the “truth”. When you engage in truthful endeavours, everyone benfits. The ingestor and consumer adds urgency and value to the data. We always have immutable and malleable phases of the data, these hopefully gets recorded and auditable. Making the data auditable while maintain the speed it traverses in your organization is important – for example, some may be using blockchain as an example of data that should not be immutable and recorded in rigid and sequential form. Regulators loves the technology as it makes the data more truthful and there’s a trusted audit trail. In the finance world, auditors who check the client company’s books is a statisitical probablility exercise. We have the power to make the entire data truthful, thus valuable.

Cloud technologies makes it easier to do the above since we won’t have to wait too long to provision services and with data traveling at the speed of light (or as close to it). We can orchestrate the best available tool for the job quickly through the cloud since servers do not have any inherit limitations, e.g. disk space, network bandwidth, proximity between services, and shared security. Cloud-based tooling works well provided it’s available through a common API or using transititional data points such as CSV files or through the event bus. CSVs will slow the data velocity but it is a ubiquitous data format and many software tools has the capability to ingest it.

Having an event messaging bus is a great approach in keeping the data moving and adding value as it traverses, however the tooling ingesting events are more limited compared to tools that ingests CSV files. Today, investing in those messaging-based tooling to read events and to extract more value is arguably more than what you would have invested (benefit + reward > cost). Adding event bus capabilities is usually a one-time effort, then the rest is gravy. I have provided an example of the benefits of switching over from batch-based processing to event-driven processing in my prior blog post https://robcube.github.io/presentations/2018/05/04/the-case-for-event-driven-architecture.html.

Having an event messaging bus is a great approach in keeping the data moving and adding value as it traverses, however the tooling ingesting events are more limited compared to tools that ingests CSV files. Today, investing in those messaging-based tooling to read events and to extract more value is arguably more than what you would have invested (benefit + reward > cost). Adding event bus capabilities is usually a one-time effort, then the rest is gravy. I have provided an example of the benefits of switching over from batch-based processing to event-driven processing in my prior blog post https://robcube.github.io/presentations/2018/05/04/the-case-for-event-driven-architecture.html.

Being event-based does not only mean to have a messaging bus ready to go; instead we can broaden that term and use it loosely. Containers is a good example of an event-driven system where we may fire up containers to isolate workflows and to minimize impact (or blast points). When a process needs to be run and there’s memory constraints, containers can be the answer to this, either by spinning up another container to run the extra process or increasing memory on the existing containers (via a restart). Having containers can be an important aspect in having an event-based solution that helps get your data to where it needs to be as quickest as possible and in a scalable approach! Containers are great in helping increase data velocity and thus the value of data.

Going serverless (the cloud) is another way to get increasing data velocity. Because serverless components offers more resiliency and it is scalable out of the box, using the cloud framework is a good way to implement your workflow. The tooling from cloud providers are very mature nowadays and with very little effort, you can get a system up and running in no time, complete with ingestion and traversing through an event-driven pipeline, then generating artifacts and visualizations. It seems like magic, but toolsets out there are very mature and can fit your needs quickly using templated approaches.

I hope from reading this you can get some ideas on how you may increase the speed of data in your workflow while adding value to it. Your goal is to attain enlightment  with your data: it’s a hard process frought with roadblocks, but the journey there is worth it. There’s work to be done, so get to it!

with your data: it’s a hard process frought with roadblocks, but the journey there is worth it. There’s work to be done, so get to it!